Over the past decade, neural speech tracking has grown from a niche analytical tool into one of the most reliable ways to study how the brain processes natural, continuous speech. But what exactly does this mean, and why has the method gained so much momentum?

In this article, we’ll provide an accessible overview of neural speech tracking, explain how it works, and explore why it has become such a powerful approach for understanding how our brains make sense of spoken language in real-world conditions.

What is “neural speech tracking”?

When we listen to someone speaking, our brain doesn’t just receive the sounds passively. It actively locks onto the rhythm and patterns of speech, almost like tapping your foot along to music. This process is what scientists call neural speech tracking.

Traditionally, neuroscience experiments used highly artificial sounds, such as single tones or isolated syllables, because they were easy to control. But of course, real communication is not a beep or a simple syllable. It’s a flowing, complex stream of words, pauses, and intonation. That’s why researchers today prefer to work with continuous, natural speech, such as recordings of stories, conversations, or podcasts.

The question is: how do we know if the brain is truly “following” that speech? This is where temporal response functions (TRFs) and similar models come in. A TRF is a kind of mathematical map that links features of the speech (e.g., how loud it is at a given moment) to the brain’s electrical response measured with EEG, across a range of time lags after each moment of the stimulus. If brain activity rises and falls in sync with the ups and downs of the speech signal, so that the model can predict the EEG from the speech better than chance, the brain is tracking that information.
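To make the idea concrete, here is a minimal sketch of the forward model behind a TRF. It assumes a single stimulus feature and a single EEG channel. The predicted EEG at each moment is a weighted sum of the stimulus at a range of past time lags, which means the stimulus is convolved with the TRF. The sampling rate, lag window and kernel shape are made-up illustrative values, not numbers from any particular study.

```python
import numpy as np

fs = 64                                   # assumed sampling rate (Hz) shared by stimulus and EEG
lags = np.arange(0, int(0.4 * fs))        # TRF covers 0-400 ms after the stimulus (assumption)
t_lag = lags / fs

# Hypothetical TRF: a positive peak near 100 ms followed by a negative one near 200 ms
trf = np.exp(-((t_lag - 0.1) ** 2) / 0.001) - 0.6 * np.exp(-((t_lag - 0.2) ** 2) / 0.002)

def predict_eeg(stimulus, trf):
    """Forward TRF model: eeg[t] = sum_k trf[k] * stimulus[t - k] (causal convolution)."""
    return np.convolve(stimulus, trf)[: len(stimulus)]

rng = np.random.default_rng(0)
envelope = np.abs(rng.standard_normal(10 * fs))    # stand-in for a 10 s speech envelope
eeg = predict_eeg(envelope, trf) + 0.5 * rng.standard_normal(envelope.size)  # plus noise

# A single impulse in the stimulus reproduces the TRF itself in the response
impulse = np.zeros(fs)
impulse[0] = 1.0
print(np.allclose(predict_eeg(impulse, trf), np.pad(trf, (0, fs - trf.size))))  # True
```

Estimating a TRF means running this model in reverse. Given the recorded EEG and the stimulus, the task is to recover the unknown kernel `trf`.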

Recent years have shown that these models are no longer fragile or experimental. Studies indicate that TRF-based speech tracking is reliable across age groups and hearing profiles. We also understand better how context shapes it. In some cases, low levels of background noise don’t hinder tracking but actually enhance it. This effect has been linked to stochastic resonance, in which a small amount of noise helps the nervous system pick up a weak signal. At the same time, analysis toolboxes and best practices have stabilized, so researchers can approach the technique with more confidence and consistency than before.

In short, speech tracking is like checking whether the brain is in sync with the conversation. And because we can do this with real, everyday speech, it brings research much closer to how we actually use language in our daily lives.

What exactly are we tracking?

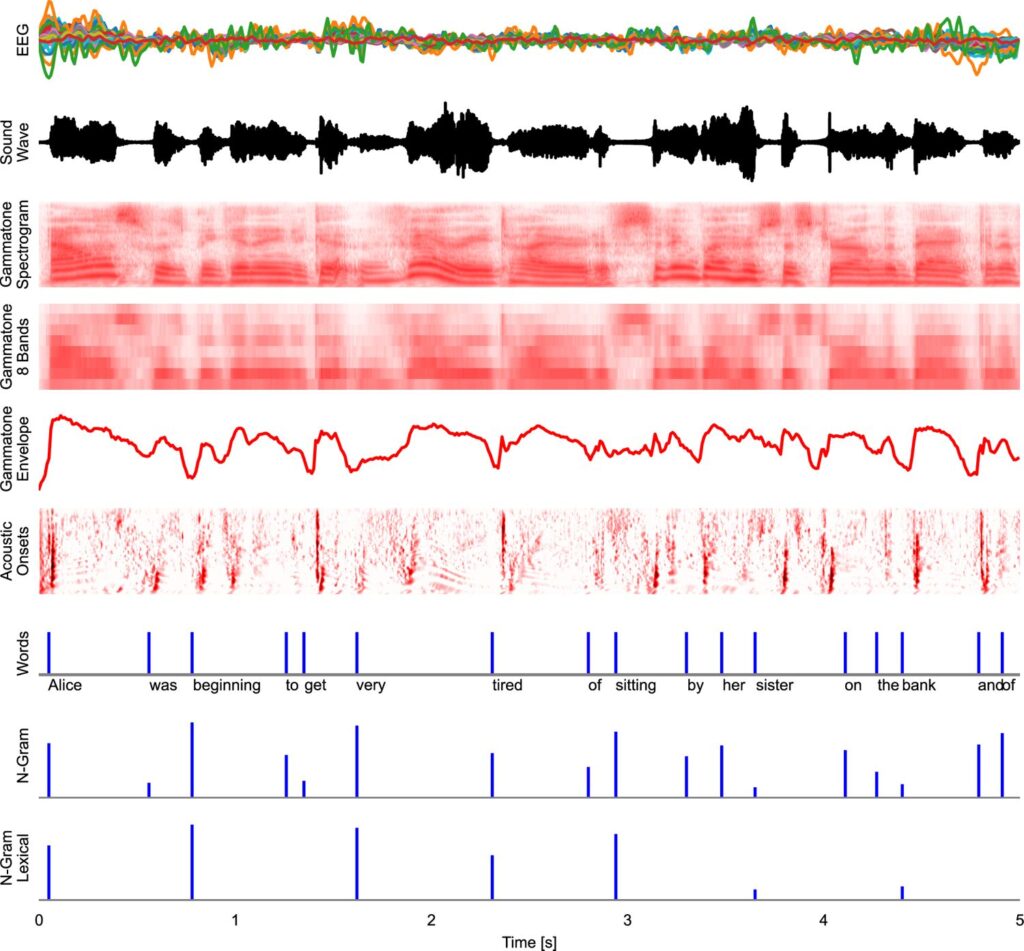

What makes speech tracking powerful is that we can use different features of speech as inputs. We might test whether the brain follows the speech envelope (the overall loudness pattern), the spectral features (the energy at different frequencies), the syllable rhythm, or even the moments when words begin.

A TRF model is only as informative as the features (or predictors) you feed into it. By comparing how well each of these features aligns with the brain’s activity, we can start to see not only that the brain is following speech, but also which parts of speech it cares about most. Importantly, multiple predictors can be modeled together to obtain an additive response known as a multivariate temporal response function (mTRF).
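In practice, an mTRF is usually fitted by expanding every predictor into time-shifted copies, with one column per feature and lag. A single linear model can then weight all of them at once. The helper `lagged_design` below is a hypothetical illustration of that construction in NumPy, including our own choice of zero-padding at the start. It is not code from any particular toolbox.

```python
import numpy as np

def lagged_design(features, lags):
    """Build a time-lagged design matrix.

    features: array (n_times, n_features), one column per predictor.
    lags: non-negative integer lags in samples.
    Column f * len(lags) + j holds feature f delayed by lags[j] samples
    (zeros where the delayed signal is undefined).
    """
    features = np.asarray(features, dtype=float)
    if features.ndim == 1:
        features = features[:, None]
    n_times, n_features = features.shape
    X = np.zeros((n_times, n_features * len(lags)))
    for f in range(n_features):
        for j, lag in enumerate(lags):
            if lag < n_times:
                X[lag:, f * len(lags) + j] = features[: n_times - lag, f]
    return X

# Two toy predictors (think: an envelope and word onsets) with lags of 0, 1 and 2 samples
feats = np.column_stack([np.arange(1, 6), np.array([0, 1, 0, 0, 1])])
X = lagged_design(feats, lags=[0, 1, 2])
print(X.shape)  # (5, 6)
```

Multiplying `X` by a weight vector that stacks one TRF per feature gives the additive mTRF prediction. The result is the sum of each predictor's own convolution with its TRF.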

But which features of speech do scientists usually study?

Acoustic and visual physical properties

1. Speech envelope

The most commonly studied feature is the speech envelope, sometimes also described as amplitude modulation. This is essentially the rise and fall of loudness over time. If you imagine looking at a sound wave and tracing its outline with a marker, that outline is the envelope. It tells us where the speech is louder and where it dips, and the brain tends to follow this rhythm very closely, because it captures the basic beat of spoken language.

From a technical perspective, the envelope is often extracted using the Hilbert transform. This method creates an “analytic signal” from the audio waveform, and taking its absolute value (the instantaneous magnitude) gives a curve that represents how the overall amplitude changes over time. This curve is then typically low-pass filtered and downsampled to the EEG sampling rate. In practice, this is how researchers isolate the slow modulations in speech that the brain tracks so reliably.
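The steps above can be sketched with SciPy. The Hilbert transform gives the magnitude, a zero-phase low-pass filter smooths it, and polyphase resampling brings it down to the EEG rate. The 8 Hz cutoff, the filter order and the 64 Hz EEG rate are assumptions chosen for illustration, since studies differ on these settings. An amplitude-modulated tone stands in for real speech.

```python
import numpy as np
from scipy.signal import butter, hilbert, resample_poly, sosfiltfilt

def speech_envelope(audio, fs_audio, fs_eeg, cutoff_hz=8.0):
    """Broadband amplitude envelope, low-passed and resampled to the EEG rate.

    fs_audio and fs_eeg must be integers (Hz); cutoff_hz is an illustrative choice.
    """
    analytic = hilbert(audio)                   # analytic signal: audio + i * Hilbert(audio)
    envelope = np.abs(analytic)                 # instantaneous magnitude
    sos = butter(4, cutoff_hz, btype="low", fs=fs_audio, output="sos")
    envelope = sosfiltfilt(sos, envelope)       # zero-phase low-pass keeps the slow modulations
    return resample_poly(envelope, fs_eeg, fs_audio)  # anti-aliased downsampling

# Example: a 1 kHz tone whose loudness is modulated at 4 Hz (roughly a syllable rate)
fs_audio, fs_eeg = 16000, 64
t = np.arange(2 * fs_audio) / fs_audio
modulator = 1 + 0.8 * np.sin(2 * np.pi * 4 * t)
audio = modulator * np.sin(2 * np.pi * 1000 * t)
env = speech_envelope(audio, fs_audio, fs_eeg)  # 128 samples that follow `modulator`
```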

2. Spectrogram

Another widely used predictor is the spectrogram. Unlike the envelope, which reduces speech to a single curve of loudness, the spectrogram shows how energy is distributed across different frequencies over time. You can think of it as a kind of heat map: along one axis is time, along the other is frequency, and the colors show how much energy is present at each moment and frequency range.

To compute the auditory spectrogram typically used in speech tracking studies, the speech signal is passed through a bank of bandpass filters that mimic how the human ear analyzes sound. Common choices are gammatone or gammachirp filters, which are designed to model the frequency selectivity of the cochlea. Each filter isolates a narrow frequency band (for example, a low, mid, or high frequency region). The envelope of each band is then calculated, usually again via the Hilbert transform. Putting all these band-specific envelopes together produces the spectrogram, a rich, multi-dimensional representation of speech.
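A minimal sketch of this pipeline is shown below. It uses SciPy's FIR gammatone filters (`scipy.signal.gammatone`) with center frequencies spaced evenly on the ERB scale, then takes the Hilbert envelope of each band. The number of bands, the 100 to 6000 Hz range and the 50 ms filter length are illustrative assumptions. Dedicated toolboxes may use other gammatone implementations, and they usually add steps such as downsampling each band to the EEG rate and sometimes amplitude compression.

```python
import numpy as np
from scipy.signal import gammatone, hilbert, lfilter

def erb_space(f_low, f_high, n_bands):
    """Center frequencies equally spaced on the ERB-number scale (Glasberg & Moore, 1990)."""
    def to_erb(f):
        return 21.4 * np.log10(1 + 0.00437 * f)
    def from_erb(e):
        return (10 ** (e / 21.4) - 1) / 0.00437
    return from_erb(np.linspace(to_erb(f_low), to_erb(f_high), n_bands))

def gammatone_spectrogram(audio, fs, n_bands=16, f_low=100.0, f_high=6000.0):
    """Return (center_freqs, band_envelopes), band_envelopes of shape (n_bands, len(audio))."""
    centers = erb_space(f_low, f_high, n_bands)
    envelopes = np.empty((n_bands, audio.size))
    for i, fc in enumerate(centers):
        # FIR gammatone filter approximating one cochlear channel (50 ms impulse response)
        b, a = gammatone(fc, "fir", numtaps=int(0.05 * fs), fs=fs)
        band = lfilter(b, a, audio)
        envelopes[i] = np.abs(hilbert(band))   # envelope of this frequency band
    return centers, envelopes

# Example: a pure 1 kHz tone should mostly excite the bands centered near 1 kHz
fs = 16000
t = np.arange(fs // 2) / fs
tone = np.sin(2 * np.pi * 1000 * t)
centers, spec = gammatone_spectrogram(tone, fs)
```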

The envelope and spectrogram are complementary. The envelope captures the broad rhythm of speech, while the spectrogram reveals the fine-grained distribution of sound energy across frequencies. Both are useful predictors, and both have been shown to align with the brain’s neural responses to speech, albeit in slightly different ways.

3. Acoustic onsets

Acoustic onsets capture the precise moments when new sounds begin, for example the sudden start of a syllable or a consonant burst. Our auditory system is very sensitive to these “edges” in sound, because they often signal important events like a speaker starting a new word.

To model this, researchers start with a gammatone spectrogram, which is a representation of sound energy across different frequencies, built using filters that mimic how the human cochlea responds to sound. From there, a neural edge-detection model is applied. This model highlights the sudden changes and suppresses the steady or unchanging parts.

The result is an acoustic onset spectrogram: a time–frequency map where only the beginnings of sounds stand out clearly. In EEG studies, these acoustic onsets are used as predictors because the brain shows particularly strong responses to the starts of sounds, making them an important cue for speech tracking.
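We don't reproduce the neural edge-detection model here. A much simpler and widely used approximation captures the same intuition: take the half-wave rectified time derivative of each band of a spectrogram. This value is positive only where energy rises, so steady segments and decays drop out. The helper `onset_spectrogram` below is a hypothetical sketch of that stand-in and should not be read as the edge-detection model itself.

```python
import numpy as np

def onset_spectrogram(spectrogram, fs):
    """Approximate acoustic onsets as the half-wave rectified time derivative of each band.

    spectrogram: array (n_bands, n_times) of band envelopes sampled at fs.
    Returns an array of the same shape that is non-zero only where band energy increases.
    """
    spectrogram = np.asarray(spectrogram, dtype=float)
    # Rate of change per second; prepending the first column keeps the output length
    deriv = np.diff(spectrogram, axis=1, prepend=spectrogram[:, :1]) * fs
    return np.maximum(deriv, 0.0)  # keep rises (onsets), discard decays and steady parts

# Toy example: one band that switches on at sample 3 and off at sample 6
band = np.array([[0, 0, 0, 1, 1, 1, 0, 0]], dtype=float)
print(onset_spectrogram(band, fs=1))  # [[0. 0. 0. 1. 0. 0. 0. 0.]]
```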

4. Lip movements

But speech is more than changes in loudness. We also rely on our eyes and watch the speaker’s lips. The brain tracks visual cues, such as how much the mouth opens vertically or stretches horizontally, and these movements help us understand speech better, especially in noisy environments. When a listener can see lip movements, tracking strengthens; when the mouth is covered by a mask, it weakens. These subtle modulations capture something deeply relevant to real-world communication, where speech is almost always audio-visual.

Phonological/linguistic properties

Beyond the physical properties of sound, language comes with its own building blocks, and the brain tracks those too. When we decompose natural speech into its linguistic features, we see that the brain responds at several levels of structure.

1. Phoneme onsets

At the most basic level are phonemes, the smallest units of sound that distinguish words. For example, the difference between bat and pat is just one phoneme. Neural tracking studies show that the brain produces clear responses when phonemes occur, almost like tiny checkpoints in the speech stream.

2. Word onsets

On a larger scale, the brain also locks onto words as they begin. Word onsets act as anchors in continuous speech, helping listeners segment the flow of sound into meaningful units. This is crucial because, unlike written language, speech does not come with spaces between words. The brain has to carve them out in real time.
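Phoneme and word onsets are typically entered into a TRF as impulse trains. Such a predictor is zero everywhere except for a unit value at each onset. The onset times themselves usually come from a forced aligner or from manual annotation. The times below are made-up placeholders, and `onset_predictor` is a hypothetical helper.

```python
import numpy as np

def onset_predictor(onset_times, duration, fs):
    """Binary impulse train: 1 at each onset sample (onset_times in seconds), 0 elsewhere."""
    x = np.zeros(int(round(duration * fs)))
    idx = np.round(np.asarray(onset_times) * fs).astype(int)
    idx = idx[(idx >= 0) & (idx < x.size)]   # ignore onsets outside the recording
    x[idx] = 1.0
    return x

fs = 100  # assumed predictor sampling rate (Hz)
word_onsets = [0.00, 0.35, 0.80]                                    # hypothetical (s)
phoneme_onsets = [0.00, 0.08, 0.15, 0.35, 0.42, 0.51, 0.80, 0.87]   # hypothetical (s)
words = onset_predictor(word_onsets, duration=1.0, fs=fs)
phonemes = onset_predictor(phoneme_onsets, duration=1.0, fs=fs)
print(np.flatnonzero(words))  # [ 0 35 80]
```

The two predictors can then be stacked as separate columns of an mTRF, so that phoneme-level and word-level responses are estimated side by side.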

3. Surprisal

A word’s surprisal measures how unexpected that word is in its context. Formally, it is the negative logarithm of the word’s probability given the preceding words. More surprising words are expected to evoke stronger brain responses. Surprisal is usually estimated with n-gram language models. N-grams are sequences of n words taken from running text or speech. For example, in the sentence “the cat sat on the mat,” the 2-grams (or “bigrams”) are “the cat,” “cat sat,” “sat on,” and so on. N-gram language models use these sequences to estimate how likely a word is to appear, given the words that came before it.
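For the bigram case, surprisal is -log2 P(word | previous word), measured in bits. The toy model below estimates those probabilities from counts with add-one (Laplace) smoothing. The smoothing method and the tiny training text are assumptions made for illustration. Real studies train n-gram models on large corpora and typically use more refined smoothing.

```python
import math
from collections import Counter

def train_bigram(tokens):
    """Return a function surprisal(prev, word) in bits from an add-one smoothed bigram model."""
    contexts = Counter(tokens[:-1])                 # how often each word starts a bigram
    bigrams = Counter(zip(tokens, tokens[1:]))
    vocab_size = len(set(tokens))

    def surprisal(prev, word):
        # Add-one smoothing gives unseen bigrams a small, non-zero probability
        p = (bigrams[(prev, word)] + 1) / (contexts[prev] + vocab_size)
        return -math.log2(p)

    return surprisal

text = "the cat sat on the mat".split()
surprisal = train_bigram(text)
# The first word has no preceding word in a bigram model, so it is skipped here
for prev, word in zip(text, text[1:]):
    print(f"{word:>4}: {surprisal(prev, word):.2f} bits")
```

A word pair the model has seen (“the cat”) gets lower surprisal than an unseen one (“the sat”), which is the property the neural predictor relies on.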

In speech tracking research, an n-gram predictor is often defined as an impulse placed at the onset of each word and scaled by that word’s surprisal value.
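A sketch of such a predictor is shown below. It differs from a plain onset predictor only in that each impulse takes the word's surprisal as its height instead of 1. The onset times, surprisal values and the helper name `scaled_impulses` are all hypothetical.

```python
import numpy as np

def scaled_impulses(onset_times, values, duration, fs):
    """Impulse at each word onset whose height is that word's value (e.g. surprisal in bits)."""
    x = np.zeros(int(round(duration * fs)))
    idx = np.round(np.asarray(onset_times) * fs).astype(int)
    vals = np.asarray(values, dtype=float)
    keep = (idx >= 0) & (idx < x.size)          # ignore onsets outside the recording
    x[idx[keep]] = vals[keep]
    return x

fs = 100
word_onsets = [0.00, 0.35, 0.80]     # hypothetical word onset times (s)
word_surprisal = [4.2, 1.3, 7.9]     # hypothetical surprisal values (bits)
predictor = scaled_impulses(word_onsets, word_surprisal, duration=1.0, fs=fs)
```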

4. Lexical surprisal

A related measure is n-gram lexical surprisal, which applies the same idea but only to content words (nouns, verbs, adjectives, adverbs). Since function words like “the” or “and” are highly predictable and carry less meaning, this predictor focuses only on the surprising or informative words, which tend to drive stronger neural responses.
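One simple way to express this is to keep the surprisal values of content words and set function words to zero. The tags below follow Universal POS labels and are hand-assigned. In practice they would come from a part-of-speech tagger. The surprisal values are hypothetical. Definitions in the literature may differ, for example by estimating the probabilities from content words alone, so treat this masking as an assumption.

```python
CONTENT_TAGS = {"NOUN", "VERB", "ADJ", "ADV"}   # Universal POS tags treated as content words

def lexical_surprisal(tags, surprisal):
    """Keep surprisal for content words; set it to 0.0 for function words."""
    return [s if t in CONTENT_TAGS else 0.0 for t, s in zip(tags, surprisal)]

words = ["the", "cat", "sat", "on", "the", "mat"]
tags = ["DET", "NOUN", "VERB", "ADP", "DET", "NOUN"]
surprisal = [2.1, 6.4, 5.0, 1.7, 0.9, 7.3]       # hypothetical values (bits)
print(lexical_surprisal(tags, surprisal))  # [0.0, 6.4, 5.0, 0.0, 0.0, 7.3]
```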

By measuring how neural responses align with phonemes, words, and surprisal levels, researchers can move beyond the idea that the brain is merely echoing sound energy. Instead, they can demonstrate that the brain is actively decoding the structure and meaning of language as it unfolds.

In summary, by testing all these predictors side by side, scientists can disentangle whether a listener is mainly locked onto the raw sound, the visible movements, or the higher-level linguistic content. This allows us to answer several questions, for instance:

- Is the brain locking onto sound energy, or higher-level linguistic categories?

- Do listeners with hearing loss rely more on visual cues?

- How does selective attention modulate the tracking of one speaker’s words versus another’s?

This ability to flexibly model predictors allows us to ask not only whether the brain tracks speech, but also what aspects of language it prioritizes.

Following attention in complex scenes

One of the most fascinating applications of speech tracking is in situations where there are multiple people talking at once, the classic cocktail party problem. Imagine standing in a busy reception hall, with music in the background, clinking glasses, and several conversations happening around you. Despite all that chaos, you can usually focus on one person’s voice and follow what they are saying.

The brain’s ability to do this is remarkable, and speech tracking methods can now reveal how it works. When researchers record EEG while a person listens to two simultaneous speakers, they can model how closely the brain’s electrical activity aligns with the speech signal of each talker. What they find is striking: the brain rhythmically locks onto the person you are paying attention to, while the ignored speaker’s voice leaves only a faint trace.
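A simplified way to see how the attended talker can be identified is to predict the EEG from each talker's envelope with a TRF and check which prediction correlates better with the recording. The simulation below is a toy sketch under strong assumptions: one EEG channel, a known and made-up TRF, random stand-in envelopes, and an ignored talker that contributes only weakly. Real auditory attention decoding trains its models on data and, in many studies, works backward by reconstructing the envelope from multichannel EEG.

```python
import numpy as np

rng = np.random.default_rng(1)
fs = 64
n = 60 * fs                 # one minute of simulated data
trf = np.hanning(16)        # hypothetical TRF spanning ~250 ms

def toy_envelope(n_samples):
    """Positive, slowly varying random signal standing in for a talker's speech envelope."""
    raw = np.abs(rng.standard_normal(n_samples))
    return np.convolve(raw, np.ones(8) / 8, mode="same")

def predict(envelope):
    """Forward TRF prediction of the EEG from one talker's envelope."""
    return np.convolve(envelope, trf)[: envelope.size]

env_a, env_b = toy_envelope(n), toy_envelope(n)
# Simulated EEG: talker A (attended) drives the response, talker B leaves a faint trace
eeg = predict(env_a) + 0.2 * predict(env_b) + 2.0 * rng.standard_normal(n)

scores = {name: np.corrcoef(predict(env), eeg)[0, 1] for name, env in (("A", env_a), ("B", env_b))}
attended = max(scores, key=scores.get)
print({k: round(float(v), 2) for k, v in scores.items()}, "-> attended talker:", attended)
```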

This means selective attention leaves a kind of neural fingerprint. It’s not just a subjective feeling of “I’m focusing on her story, not his.” We can see it in the data: the attended voice resonates much more strongly in the EEG than the unattended one.

Why is this exciting?

Because it transforms attention into something measurable. In the future, this could power smarter hearing aids that detect which voice you are tuned into and amplify that person automatically, helping people with hearing difficulties navigate noisy social environments. It also opens doors in research and clinical practice. For instance, we can now ask how attention tracking changes with age, how it differs in children versus adults, or how disorders like ADHD affect the ability to lock onto one speaker in a crowd.

In other words, neural speech tracking allows us to peek into one of the brain’s most impressive everyday tricks: finding the signal in the noise.

TRF under the hood: how to estimate it

As mentioned above, a temporal response function (TRF) is a mathematical model that estimates the relationship between a stimulus feature (e.g., the speech envelope) and the brain response (the EEG signal). But how do researchers actually build these models of brain-speech alignment? Two approaches have become the most widely used:

1. Linear regression

This is the approach used in the well-known mTRF Toolbox. The idea is simple: you take a speech feature (say, the envelope) together with time-shifted copies of it covering a range of lags, and find the set of weights that, when combined, best predicts the EEG signal. Because EEG is noisy and complex, a regularization step (usually ridge regression) is added to keep the model from overfitting.
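The regression approach fits in a few lines. Time-lagged copies of the stimulus are stacked into a design matrix X, and the ridge problem w = (XᵀX + λI)⁻¹ Xᵀy is solved for the TRF weights w. The sketch below is a self-contained NumPy illustration with a made-up λ, a white-noise stimulus and a simulated response. It is not the mTRF Toolbox's code. Dedicated toolboxes add features such as multiple channels and cross-validated choice of λ.

```python
import numpy as np

def lag_matrix(x, n_lags):
    """Column k holds x delayed by k samples (zeros before the signal starts)."""
    X = np.zeros((x.size, n_lags))
    for k in range(n_lags):
        X[k:, k] = x[: x.size - k]
    return X

def fit_trf_ridge(stimulus, response, n_lags, lam):
    """Ridge solution w = (X^T X + lam * I)^-1 X^T y."""
    X = lag_matrix(stimulus, n_lags)
    return np.linalg.solve(X.T @ X + lam * np.eye(n_lags), X.T @ response)

rng = np.random.default_rng(0)
fs, n_lags = 64, 20
true_trf = np.sin(np.linspace(0, 2 * np.pi, n_lags)) * np.hanning(n_lags)  # hypothetical
stim = rng.standard_normal(120 * fs)            # 2 minutes of a white-noise stand-in stimulus
eeg = np.convolve(stim, true_trf)[: stim.size] + rng.standard_normal(stim.size)
w = fit_trf_ridge(stim, eeg, n_lags, lam=10.0)
print(np.corrcoef(w, true_trf)[0, 1])           # close to 1 on this easy simulated data
```

Larger λ values shrink the weights toward zero and smooth the estimate. In real data, λ is usually chosen by cross-validation on prediction accuracy.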

The strength of this method is that it is straightforward, efficient, and well suited to comparing predictive accuracy across conditions. Its downsides are that it can sometimes blur fine details in timing and that it is sensitive to collinearity: when two predictors are very similar, their contributions can get mixed together.
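The extreme case of collinearity is two identical predictors. Ridge regression cannot tell them apart, so it splits the weight evenly between them. Without the λ penalty, XᵀX would be singular here and there would be no unique solution at all. This toy example uses single-lag predictors and an arbitrary λ = 1 for clarity.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.standard_normal(1000)
X = np.column_stack([x, x])      # two perfectly collinear predictors
y = 1.0 * x                      # the response is driven by "one" feature with weight 1
lam = 1.0
w = np.linalg.solve(X.T @ X + lam * np.eye(2), X.T @ y)
print(w)   # two equal weights of about 0.5 each: the true contribution is shared
```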

2. Boosting algorithm

This approach is implemented in toolboxes such as Eelbrain. Instead of estimating all the weights in one shot, boosting builds the model step by step. At each iteration it tries small changes to individual weights and keeps only the change that most improves the model’s fit to the EEG. Over time, this produces a sparse model in which only the strongest features remain. Because of this incremental selection, boosting is much less sensitive to collinearity, making it especially useful when you want to compare predictors that are similar to each other. The result is often a sharper, cleaner TRF, where peaks stand out clearly in time. The trade-off is that it takes more computation and, in some contexts, may not maximize predictive power as well as ridge regression.
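The core loop of boosting can be sketched as coordinate-wise updates with a fixed step size. At each iteration, the single weight whose change most reduces the squared prediction error gets nudged, and the loop stops when no nudge helps. This is a simplified illustration of the general idea under our own assumptions: a fixed step `delta` and stopping on training error. It is not Eelbrain's implementation, which differs in details such as stopping based on held-out data. Applied to two identical predictors, where ridge regression would split the weight evenly, it assigns all the weight to one of them.

```python
import numpy as np

def boost(X, y, delta=0.01, max_iter=10_000):
    """Simplified coordinate-wise boosting with a fixed step size."""
    w = np.zeros(X.shape[1])
    residual = np.asarray(y, dtype=float).copy()
    col_norms = np.sum(X ** 2, axis=0)
    for _ in range(max_iter):
        corr = X.T @ residual
        # Change in squared error if weight j moves by delta in its best direction
        gain = -2 * delta * np.abs(corr) + delta ** 2 * col_norms
        j = int(np.argmin(gain))
        if gain[j] >= 0:          # no single step reduces the error any more: stop
            break
        step = delta * np.sign(corr[j])
        w[j] += step
        residual -= step * X[:, j]
    return w

rng = np.random.default_rng(0)
x = rng.standard_normal(1000)
X = np.column_stack([x, x])       # two identical predictors
w = boost(X, y=x)
print(w)   # all the weight on the first predictor, the second stays at 0
```

With perfectly identical predictors, the choice of winner is arbitrary. Here it is the first column, because `np.argmin` returns the first of tied indices.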

In practice, both methods usually lead to the same big-picture conclusions: they show us whether and when the brain is following speech. But they highlight different strengths:

- Regression for prediction benchmarks

- Boosting for interpretability and robustness

Beyond the lab: mobile EEG and speech tracking

One of the most exciting frontiers is combining speech tracking with mobile EEG systems like BrainAccess. Traditionally, experiments had to be run in quiet, controlled sound booths, with bulky lab equipment. But real communication doesn’t happen in a booth; it happens in cafés, classrooms, hospitals, or even on the street. Mobile EEG makes it possible to bring speech tracking into these natural settings.

This shift has several advantages.

- It allows us to study listening in the environments that really matter, where background noise, movement, and visual cues all interact.

- It opens the door to personalized assistive technologies: imagine a hearing aid that doesn’t just amplify everything equally, but detects whom you are paying attention to and enhances that person’s voice. With portable EEG, this idea becomes far more feasible, since the technology can move with the listener.

- There’s also a major benefit for research. Mobile EEG lets scientists test larger, more diverse groups of people outside the lab such as children in classrooms, older adults at home, or patients in clinics. That means more ecologically valid data, more realistic benchmarks, and faster progress in understanding how the brain handles speech in everyday life.

Looking ahead, mobile speech tracking could power entirely new applications: brain-based measures of listening effort in workplaces, adaptive audio systems for augmented reality, or even neurofeedback tools that help people train their focus in noisy settings. In short, by pairing TRF models with mobile EEG, we move one step closer to bridging the gap between neuroscience and the realities of daily communication.

Conclusions

Neural speech tracking has matured into a tool that can provide ecologically valid benchmarks for how the brain processes speech in real-life conditions.

For researchers, it has become a trusted measure of attention, listening effort, and language processing in naturalistic contexts.

For clinicians, it offers the possibility of more natural assessments that go beyond the artificial sound booth.

For technology developers, it points the way toward smarter, brain-informed solutions for navigating the noisy, complex soundscapes of daily life.

We’re excited to see how this technology will continue to evolve and, with the advancement of mobile EEG devices like BrainAccess, how it will find impactful applications in real-world scenarios.

References

- Panela, R. A., Copelli, F., & Herrmann, B. (2024). Reliability and generalizability of neural speech tracking in younger and older adults. Neurobiology of Aging, 134, 165-180. https://doi.org/10.1016/j.neurobiolaging.2023.11.007

- Herrmann, B. (2025). Enhanced neural speech tracking through noise indicates stochastic resonance in humans. eLife, 13, RP100830. https://doi.org/10.7554/eLife.100830.3

- Crosse, M. J., Di Liberto, G. M., Bednar, A., & Lalor, E. C. (2016). The multivariate temporal response function (mTRF) toolbox: a MATLAB toolbox for relating neural signals to continuous stimuli. Frontiers in Human Neuroscience, 10, 604. https://doi.org/10.3389/fnhum.2016.00604

- Brodbeck, C., Das, P., Gillis, M., Kulasingham, J. P., Bhattasali, S., Gaston, P., … & Simon, J. Z. (2023). Eelbrain, a Python toolkit for time-continuous analysis with temporal response functions. eLife, 12, e85012. https://doi.org/10.7554/eLife.85012

- Straetmans, L., Holtze, B., Debener, S., Jaeger, M., & Mirkovic, B. (2022). Neural tracking to go: auditory attention decoding and saliency detection with mobile EEG. Journal of Neural Engineering, 18(6), 066054. https://doi.org/10.1088/1741-2552/ac42b5

- Straetmans, L., Adiloglu, K., & Debener, S. (2024). Neural speech tracking and auditory attention decoding in everyday life. Frontiers in Human Neuroscience, 18, 1483024. https://doi.org/10.3389/fnhum.2024.1483024

Martina Berto, PhD

Research Engineer & Neuroscientist @ Neurotechnology.
