For over a decade, Lab Streaming Layer (LSL) has quietly transformed the way neuroscience and biomedical researchers collect and synchronize data. From brain-computer interfaces (BCI) to hyperscanning and Mobile Brain/Body Imaging (MoBI), LSL has become the de facto standard for streaming multimodal biosignals in real time.

Now, this essential open-source framework has received its long-awaited official reference paper, published in Imaging Neuroscience (Kothe et al., 2025).

What is LSL?

The Lab Streaming Layer (LSL) is an open-source, networked middleware ecosystem designed to stream, receive, synchronize, and record data from many kinds of sensors, such as EEG amplifiers, eye trackers, and devices that capture behavioral data. Originally created by Christian Kothe while he was working at the Swartz Center for Computational Neuroscience at the University of California San Diego, the project is now maintained by an international team of developers and receives contributions from a large user community.

Why has it become so popular?

When running neuroscience experiments, researchers often need to record data from many sources at once:

- EEG, fNIRS, or MEG signals from the brain

- Eye tracking or motion capture for behavior

- EMG or heart rate for physiology

- Stimulus presentation markers or environmental sensors

Each of these devices usually runs on its own clock, making it difficult (and often expensive) to synchronize them accurately. Traditionally, labs relied on hardware triggers (like TTL pulses or shared clocks), but these solutions are not always feasible, especially for mobile, wearable, or consumer-grade devices like BrainAccess.

This is where LSL comes in. LSL is a software-based framework that:

- Attaches precise timestamps to every data sample.

- Estimates the offsets between device clocks using a network protocol inspired by NTP, rather than adjusting the clocks themselves.

- Compensates for delays and jitter so data can be aligned on a common timeline.

- Provides a unified API for real-time streaming and supports recording to XDF (Extensible Data Format), a flexible multimodal file format.
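The NTP-style offset estimate can be sketched in a few lines. This is a minimal illustration, not liblsl's implementation: it assumes a receiver sends a probe at local time `t0`, the sender stamps its receive and reply times `t1` and `t2` on its own clock, and the reply arrives back at local time `t3`. Keeping the probe with the smallest round-trip time, as NTP-style schemes commonly do, limits the error caused by asymmetric network delays. The `Probe` class and `estimate_correction` helper are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Probe:
    t0: float  # probe sent (receiver's clock)
    t1: float  # probe received (sender's clock)
    t2: float  # reply sent (sender's clock)
    t3: float  # reply received (receiver's clock)

    @property
    def rtt(self) -> float:
        # Round-trip network time, excluding the sender's processing time
        return (self.t3 - self.t0) - (self.t2 - self.t1)

    @property
    def offset(self) -> float:
        # Estimated (sender clock - receiver clock), assuming symmetric delays
        return ((self.t1 - self.t0) + (self.t2 - self.t3)) / 2.0


def estimate_correction(probes):
    """Return (correction, rtt) from the probe with the smallest round trip.

    The correction is the value to ADD to sender timestamps to express
    them on the receiver's clock.
    """
    best = min(probes, key=lambda p: p.rtt)
    return -best.offset, best.rtt


# Hypothetical probes: the sender's clock runs 5 s ahead of the receiver's.
probes = [
    Probe(t0=10.000, t1=15.001, t2=15.0012, t3=10.0022),  # 1 ms each way
    Probe(t0=11.000, t1=16.010, t2=16.0102, t3=11.0112),  # 10 ms out, 1 ms back
]
correction, rtt = estimate_correction(probes)
print(f"correction = {correction:.4f} s, rtt = {rtt * 1000:.1f} ms")
```

The second probe, with its lopsided delays, would have produced an estimate about 4.5 ms off; selecting by round-trip time discards it. In pylsl, `StreamInlet.time_correction()` returns the analogous correction for a connected stream.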

In practice, these features mean that researchers can connect EEG amplifiers, eye trackers, motion sensors, and stimulus computers over a local network, and LSL aligns all data streams on a common timeline, typically with sub-millisecond precision (not counting hardware delays inside the devices themselves, which are discussed below).
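Once every stream's timestamps are on a common clock, aligning events with continuous data reduces to a lookup. The sketch below assumes the timestamps have already been corrected and uses hypothetical stream values; it maps each marker to the nearest EEG sample with a binary search, which is the basic step behind cutting event-locked epochs.

```python
from bisect import bisect_left


def nearest_sample(timestamps, t):
    """Index of the sample whose timestamp is closest to t (timestamps sorted)."""
    i = bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    before, after = timestamps[i - 1], timestamps[i]
    return i if (after - t) < (t - before) else i - 1


# Hypothetical 250 Hz EEG stream starting at t = 100.0 s on the common clock
srate = 250.0
eeg_ts = [100.0 + k / srate for k in range(2500)]

# Hypothetical markers from a separate stimulus stream, same common clock
markers = [(101.0, "stim_on"), (102.5021, "stim_on"), (105.999, "stim_off")]

events = [(nearest_sample(eeg_ts, t), label) for t, label in markers]
print(events)
```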

How LSL Works – From Overview to Design

Thanks to its layered design, LSL hides the complexities of time synchronization and networking from users, while still offering flexibility and reliability across diverse setups.

System Overview

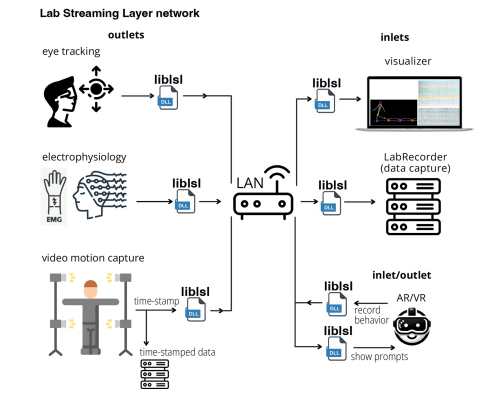

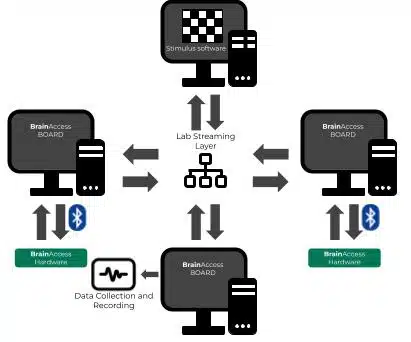

Different acquisition devices (EEG amplifiers, EMG sensors, cameras, etc.) can publish their data through an LSL outlet. These outlets send data into the LSL network layer, which automatically takes care of stream discovery, synchronization, and jitter correction over a local network.
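Jitter correction can be pictured as fitting a straight line to timestamps that should be evenly spaced. The following is a batch least-squares simplification that assumes a constant sampling rate, no gaps, and zero-mean timing noise. liblsl's own dejittering (enabled in pylsl through the `proc_dejitter` processing flag) works online, so treat this as an illustration of the idea rather than its algorithm. The jitter values are synthetic.

```python
import random


def dejitter(timestamps):
    """Replace jittery timestamps with a least-squares line t_k = a + b*k.

    Assumes a constant sampling rate and no gaps in the stream.
    Returns (smoothed_timestamps, estimated_sampling_interval).
    """
    n = len(timestamps)
    mean_k = (n - 1) / 2.0
    mean_t = sum(timestamps) / n
    sxx = sum((k - mean_k) ** 2 for k in range(n))
    sxy = sum((k - mean_k) * (t - mean_t) for k, t in enumerate(timestamps))
    slope = sxy / sxx  # seconds per sample
    intercept = mean_t - slope * mean_k
    return [intercept + slope * k for k in range(n)], slope


random.seed(0)
srate = 500.0  # hypothetical nominal rate (Hz)
true_ts = [10.0 + k / srate for k in range(5000)]
# Synthetic jitter of up to +/-2 ms on every timestamp
jittery = [t + random.uniform(-0.002, 0.002) for t in true_ts]

smoothed, interval = dejitter(jittery)
err_before = max(abs(a - b) for a, b in zip(jittery, true_ts))
err_after = max(abs(a - b) for a, b in zip(smoothed, true_ts))
print(f"max error: {err_before * 1e3:.3f} ms -> {err_after * 1e3:.3f} ms")
```

With real hardware, transmission delays only ever make samples late, never early, so a fit like this removes the scatter but not a constant lag.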

Applications (such as real-time analysis tools, visualization dashboards, or stimulus software) connect via LSL inlets to receive the synchronized streams, while LabRecorder, the standard LSL recording application, combines the selected streams into a single XDF file with timing metadata for offline analysis.
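The timing metadata matters because LabRecorder stores each stream's original timestamps together with periodic clock-offset measurements, leaving the correction to load time. XDF importers such as pyxdf typically fit a linear model to those measurements; the sketch below instead uses plain linear interpolation between neighboring measurements, with hypothetical values showing a slow drift. `offset_at` and `synchronize` are illustrative names, not part of any library.

```python
from bisect import bisect_right


def offset_at(t, offsets):
    """Clock offset at time t, linearly interpolated between measurements.

    offsets: list of (collection_time, offset_value) pairs sorted by time.
    Outside the measured range the nearest measurement is held constant.
    """
    times = [c for c, _ in offsets]
    i = bisect_right(times, t)
    if i == 0:
        return offsets[0][1]
    if i == len(offsets):
        return offsets[-1][1]
    (c0, o0), (c1, o1) = offsets[i - 1], offsets[i]
    weight = (t - c0) / (c1 - c0)
    return o0 + weight * (o1 - o0)


def synchronize(timestamps, offsets):
    # Add the time-varying offset to map each timestamp onto the recorder's clock
    return [t + offset_at(t, offsets) for t in timestamps]


# Hypothetical measurements every 5 s: a 2 s offset drifting by 20 us per second
offsets = [(0.0, 2.0000), (5.0, 2.0001), (10.0, 2.0002)]
corrected = synchronize([0.0, 2.5, 7.5, 12.0], offsets)
print(corrected)
```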

LSL Design

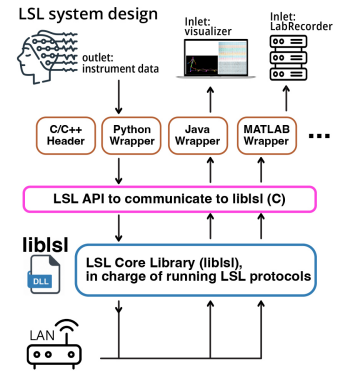

Internally, LSL is built on a layered design that balances accessibility with technical robustness.

At the surface, researchers and developers interact with a unified API that is available in many programming languages, from Python and MATLAB to C++ and Rust, making it straightforward to connect different devices and applications without needing to master the complexities of network programming.

Beneath this interface lies the core library, liblsl, written in C++, which acts as the engine of the system, managing data flow, time synchronization, and stream coordination across machines.
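Part of managing data flow is deciding what happens when a consumer falls behind. liblsl buffers data for connected inlets up to a configurable limit (in pylsl, the `max_buffered` argument of `StreamOutlet` and `max_buflen` of `StreamInlet`) and, as far as we understand, discards the oldest data once that limit is reached. The toy model below imitates that policy with a bounded `deque`; the class is hypothetical and is not liblsl code.

```python
from collections import deque


class ToySampleBuffer:
    """Hypothetical bounded buffer between a producer and a slow consumer.

    When full, appending a new sample evicts the oldest one, so a stalled
    consumer loses the start of the backlog rather than the newest data.
    """

    def __init__(self, max_samples):
        self._buf = deque(maxlen=max_samples)
        self.dropped = 0

    def push(self, sample, timestamp):
        if len(self._buf) == self._buf.maxlen:
            self.dropped += 1  # the deque will evict the oldest entry
        self._buf.append((sample, timestamp))

    def pull_all(self):
        chunk = list(self._buf)
        self._buf.clear()
        return chunk


# Hypothetical 100 Hz stream, buffer holding 2 s, consumer stalled for 3 s
srate = 100.0
buf = ToySampleBuffer(max_samples=int(2 * srate))
for k in range(300):
    buf.push([float(k)], k / srate)

chunk = buf.pull_all()
print(len(chunk), buf.dropped, chunk[0][1])
```

In practice the remedy is to pull data often enough, or to raise the buffer limit for consumers that may pause.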

Supporting this core is a set of well-defined protocols that govern how data streams are discovered, subscribed to, transmitted, and annotated with metadata, while also keeping their timing aligned. Together, these elements make LSL resilient to network interruptions and capable of maintaining synchronization accuracy at the sub-millisecond level, which is essential for neuroscience experiments where precise timing is everything.
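Stream metadata in LSL travels as an XML header: fixed fields such as name, type, channel count, nominal rate, and channel format, plus a free-form `desc` element where, following the community's XDF metadata conventions, channel labels are commonly stored. The sketch below builds a header of that general shape with Python's standard library. The stream name, labels, and source ID are hypothetical, and the header liblsl actually produces contains more fields than shown.

```python
import xml.etree.ElementTree as ET


def make_stream_header(name, stype, labels, srate, fmt, source_id):
    """Build an LSL-style <info> element (simplified, illustrative only)."""
    info = ET.Element("info")
    fields = [
        ("name", name),
        ("type", stype),
        ("channel_count", str(len(labels))),
        ("nominal_srate", str(srate)),
        ("channel_format", fmt),
        ("source_id", source_id),
    ]
    for tag, value in fields:
        ET.SubElement(info, tag).text = value
    # Free-form description: one <channel> entry per channel
    channels = ET.SubElement(ET.SubElement(info, "desc"), "channels")
    for label in labels:
        channel = ET.SubElement(channels, "channel")
        ET.SubElement(channel, "label").text = label
        ET.SubElement(channel, "unit").text = "microvolts"
        ET.SubElement(channel, "type").text = "EEG"
    return info


header = make_stream_header("ExampleEEG", "EEG", ["Fp1", "Fp2", "O1", "O2"],
                            250.0, "float32", "example-device-001")
print(ET.tostring(header, encoding="unicode"))

# A consumer can read the labels back with a simple path query
labels = [e.text for e in header.findall("./desc/channels/channel/label")]
```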

Highlights from the new reference paper

In their newly published paper, Kothe and colleagues (2025) set out to document and validate LSL after more than a decade of widespread use. Their goal was to give the community a clear technical reference: how LSL works, how well it performs, and what users need to be aware of when applying it to multimodal research.

To achieve this, the authors tested LSL’s synchronization accuracy in both local and networked setups, comparing professional-grade EEG/EMG hardware with consumer-grade devices like webcams.

Moreover, they provided practical guidance on measuring hardware-induced delays (“setup offsets”) that LSL cannot directly correct, including a summary of common pitfalls (e.g., irregular sampling from consumer devices, wireless congestion) and configuration tweaks to maintain accuracy.
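A common way to measure a setup offset is to record the physical event itself, for example with a photodiode attached to the stimulus screen and wired into an auxiliary channel, and to compare its detected onsets with the software markers. The sketch below assumes onset detection has already been done and that both series are on the common LSL clock; the numbers are hypothetical. The median keeps a few mis-detected onsets from skewing the estimate.

```python
from statistics import median, pstdev


def setup_offset(marker_times, onset_times):
    """Estimate a constant lag between markers and physical events.

    Returns (median_lag, spread) in seconds; a positive lag means the
    physical event was recorded after its marker.
    """
    lags = [onset - marker for marker, onset in zip(marker_times, onset_times)]
    return median(lags), pstdev(lags)


# Hypothetical stimulus markers and photodiode onsets (seconds, common clock)
markers = [1.000, 2.000, 3.000, 4.000, 5.000]
photodiode = [1.0182, 2.0179, 3.0185, 4.0181, 5.0183]

lag, spread = setup_offset(markers, photodiode)
print(f"setup offset = {lag * 1e3:.1f} ms (spread {spread * 1e3:.2f} ms)")

# Shift markers by the measured lag before epoching
corrected_markers = [m + lag for m in markers]
```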

Key Findings

The new reference paper shows that LSL delivers sub-millisecond synchronization accuracy on standard hardware, making it more than capable of supporting demanding multimodal neuroscience experiments. The authors also demonstrate its robustness to network failures, with streams automatically reconnecting and data continuity being preserved. Moreover, they showed LSL’s ability to scale gracefully from small laboratory setups to large, distributed experiments with dozens of simultaneous devices.

Taken together, the findings make a strong case for why LSL has become a cornerstone of modern neuroscience. The framework allows researchers to integrate a wide variety of biosignal streams in real time, while also consolidating recordings into the versatile XDF format, which simplifies analysis, sharing, and reproducibility.

At the same time, the authors are clear about its boundaries: LSL cannot directly account for hardware-internal delays, which must be measured by the user, and some irregular or high-bandwidth devices (such as webcams or raw HD video) may require extra care to maintain timing accuracy.
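Before relying on an irregular device such as a webcam, it helps to compare its timestamps against its nominal rate. The check below is a minimal sketch with hypothetical data and a hypothetical threshold of 1.5 times the nominal interval: it reports the effective sampling rate and the positions of unusually long intervals, such as dropped frames.

```python
def timing_report(timestamps, nominal_srate, gap_factor=1.5):
    """Return (effective_srate, gap_indices) for a stream's timestamps.

    gap_indices lists each i where the interval between samples i and i+1
    exceeds gap_factor times the nominal sampling interval.
    """
    intervals = [b - a for a, b in zip(timestamps, timestamps[1:])]
    effective_srate = len(intervals) / (timestamps[-1] - timestamps[0])
    limit = gap_factor / nominal_srate
    gaps = [i for i, d in enumerate(intervals) if d > limit]
    return effective_srate, gaps


# Hypothetical 30 fps webcam over 1 s with frame 10 dropped
frames = [k / 30.0 for k in range(31) if k != 10]
rate, gaps = timing_report(frames, nominal_srate=30.0)
print(f"effective rate {rate:.1f} Hz, gaps after samples {gaps}")
```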

What stands out is not only the technical rigor but also the clarity with which the authors present LSL’s architecture, benchmarks, and practical guidance. Their work elevates LSL from a widely used tool to a well-documented, validated reference point for the community, a resource that will guide researchers, developers, and engineers for years to come.

Why this matters for EEG Research (and for BrainAccess)

For anyone working with EEG, synchronization is a necessity. Brain signals are fast, subtle, and easily confounded if their timing does not align precisely with external events such as stimulus onsets, motor actions, or environmental changes. Even a few milliseconds of drift can make the difference between detecting a meaningful brain response and missing it altogether.
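To put a number on drift: the crystal oscillators behind ordinary device clocks are often specified only to within tens of parts per million (ppm). The arithmetic below uses a hypothetical 20 ppm rate mismatch; left uncorrected over a 30-minute session, it accumulates to about 36 ms, or 9 samples at 250 Hz, well beyond a few milliseconds.

```python
def accumulated_drift_s(drift_ppm, duration_s):
    """Timing error (s) from an uncorrected clock-rate mismatch given in ppm."""
    return drift_ppm * 1e-6 * duration_s


drift = accumulated_drift_s(drift_ppm=20, duration_s=30 * 60)  # hypothetical values
samples_off = drift * 250  # expressed in samples of a 250 Hz EEG stream
print(f"{drift * 1e3:.1f} ms, about {samples_off:.0f} samples at 250 Hz")
```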

This is why the publication of the LSL reference paper is so important for the EEG community at large: it confirms that researchers can rely on a software framework that has been tested and validated to deliver the level of temporal precision that modern experiments demand. By providing a common language for data streams, LSL lowers the technical barriers that once made multimodal neuroscience an undertaking reserved for highly specialized labs with expensive hardware.

Today, EEG can be combined seamlessly with motion capture, eye tracking, or VR environments, opening the door to richer and more ecologically valid paradigms.

For BrainAccess, this development resonates particularly strongly. Our mission has always been to make EEG more accessible without compromising on scientific rigor, and native integration with LSL is a key part of that philosophy. By streaming BrainAccess data directly into LSL, researchers can connect our devices with other tools they already use, whether that means synchronizing EEG with stimulus presentation software, logging behavioral responses, or combining multiple BrainAccess headsets for hyperscanning studies. It means that our users are not locked into a proprietary ecosystem but can instead participate in the growing open infrastructure of neuroscience.

The fact that LSL now has a peer-reviewed reference paper not only validates the technology itself but also strengthens the ecosystem in which BrainAccess operates, ensuring that our users can build their projects on a foundation that is both robust and recognized by the scientific community.

Conclusion

Lab Streaming Layer has long been the quiet enabler of modern neuroscience, making it possible to collect and synchronize streams of EEG and other biosignals with the precision needed for real-world experiments.

By attaching accurate timestamps, handling clock drift and jitter, and unifying diverse devices through a common interface, LSL has allowed researchers to focus on discovery rather than troubleshooting.

The publication of its long-awaited reference paper marks a turning point: it formally validates a framework that has already transformed the field and provides the community with a clear, authoritative resource to build upon. For EEG research in general, this recognition confirms that complex, multimodal experiments can be run with confidence using a software-based approach.

For BrainAccess, it reinforces the decision to integrate LSL at the core of our devices and software, ensuring that our users stand on a foundation that is both technically robust and scientifically recognized. With this validation, LSL is no longer just the invisible backbone of neuroscience; it is an acknowledged standard that will continue to shape the way we study and interact with the brain.


Reference

Kothe, C., Shirazi, S. Y., Stenner, T., Medine, D., Boulay, C., Grivich, M. I., … & Makeig, S. (2025). The lab streaming layer for synchronized multimodal recording. Imaging Neuroscience, 3, IMAG-a. https://doi.org/10.1162/IMAG.a.136

Martina Berto, PhD

Research Engineer & Neuroscientist @ Neurotechnology.
